Docker, Kafka, Mulesoft, Platform Events, IoT Cloud - All together!

UPDATE: By popular demand, I want to note before I begin that the solution described here is not necessarily the optimal one for the scenario presented below. It is meant to be an example of how all these tools can coexist in case they're part of an enterprise landscape. Especially with Salesforce's acquisition of Mulesoft, this very well might end up being the case for some enterprises. Toward the bottom of the post, there's a section with a few bullet points on what the solution could look like (including serverless options, of course), but it's a long post and I can appreciate that not everyone will get there!

The title says it all!

In this post, I'll walk through an example of how Docker, Kafka, Mulesoft, Platform Events and IoT Cloud could work together, to create a really powerful landscape for companies with IoT use cases.

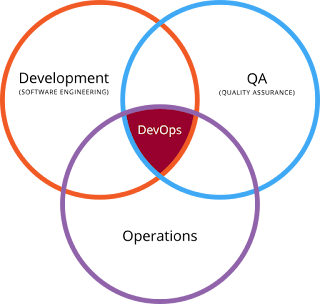

I thought of writing this one because integration of different systems is one of my biggest interests (alongside DevOps). I find that streaming events in real time is extremely useful, cool and it has even been trending lately, with the great evolution of tools like Apache Kafka.

This post is not meant to go deep on what any of these technologies can do individually (that would probably take many posts for each!). It's meant to open up possibilities for anyone who is interested in IoT and in how streaming events in real time can be beneficial in a multitude of scenarios.

Disclaimer: there's an IoT Cloud trail on Trailhead that has a similar use case, but it only focuses on IoT Cloud; it doesn't touch on Kafka or Mulesoft, for example. Similarly, there might be some Kafka tutorials out there that handle equivalent basic use cases, but they won't touch on Mulesoft and Salesforce IoT.

UPDATE: As mentioned in the opening note, I wanted to put all of these technologies together in one place, in order to show the power they can help unleash and the huge amount of options and features they can open up, but it doesn't mean that they're all needed in this particular scenario. I just hope it doesn't get too convoluted, because I had a lot of fun doing it!

Scenario

The scenario I'll be focusing on for this post is the following:

About the company

- The company we're working with is called Ivan's Bridges and it's an engineering firm that builds and manages smart bridges (proper bridges in motorways, for example)

- The bridges have weight sensors and are connected to Ivan's Bridges' network

- The bridges can send events via HTTP POST every time the weight they carry changes and stays in a given range for a specified amount of time. This means that not all changes will be sent to this endpoint, just changes that matter (because limits and all)
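To make the "changes and stays in a given range" rule a bit more concrete, here's a minimal sketch of how a sensor could decide when to send an event. Everything in it is an assumption for illustration: the range width, the hold time, the `BridgeSensor` class and the `bridgeId`/`weight` payload keys are made up, as the real bridges' firmware isn't part of this post.

```python
from dataclasses import dataclass
from typing import Optional

RANGE_SIZE_KG = 100   # assumed width of one weight range
HOLD_SECONDS = 30     # assumed time the weight must stay in a range


@dataclass
class BridgeSensor:
    """Hypothetical sensor that only reports changes that matter."""
    bridge_id: str
    reported_range: Optional[int] = None    # last range that was sent
    candidate_range: Optional[int] = None   # range currently being observed
    candidate_since: float = 0.0            # when the candidate range started

    def read(self, weight_kg: float, now: float) -> Optional[dict]:
        """Return an event payload if one should be sent, else None."""
        current = int(weight_kg // RANGE_SIZE_KG)
        if current != self.candidate_range:
            # Weight moved to a different range: restart the timer.
            self.candidate_range = current
            self.candidate_since = now
            return None
        held = now - self.candidate_since
        if current != self.reported_range and held >= HOLD_SECONDS:
            # Stayed long enough in a range we haven't reported yet.
            self.reported_range = current
            return {"bridgeId": self.bridge_id, "weight": weight_kg}
        return None
```

Short spikes never reach the endpoint with this approach, which keeps the event volume (and the limits) under control.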

High level requirements

- Ivan's Bridges want to manage their assets and have an indication in Salesforce of which ones have had too much weight for the specified period of time and need urgent fixing (or clearing). The bridges might collapse if not handled properly!

- They also want to automatically create a case when this happens and close it in case the issue gets fixed before the agent gets there (or if the agent forgets, but they don't do that, do they?)

- They also want to be flexible and future-proof the way the events are handled, as they expect other systems will need to subscribe to and publish them, as other tasks need to happen outside of Salesforce

Components/Tools that form the solution

Note: the solution is, of course, not production ready and I'll mention areas of improvement in a section below, but it's good enough to illustrate the points I want to make.

Components hosted on Ivan's Bridges network (localhost in this case, but it could be anywhere!)

- Docker: why build it yourself, when there's a container for that? If you don't know what Docker or containers are, you need to start learning ASAP. To put it in as high level terms as I can, containerisation allows for apps to be packaged together with all of their dependencies (even OS needs). Docker is just one specific container platform (there are many), and it gives you the runtime and tools to create, manage, install and run containers, independent of the platform Docker itself runs on. A great benefit of using this technology is that it enables the use of the Docker Hub, where you can search and find an amazing range of public container images, ready to use and only a couple of commands away. Anyway, we'll need Docker installed on the machine, as we'll be getting Kafka from the Docker Hub. Check Docker and Docker Hub for more information.

- Apache Zookeeper: quoting their page: "ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services". It's a dependency for synchronising and handling the Kafka configuration, so we'll go ahead and install that. While there's a possibility of having multiple Zookeepers, for availability and scalability purposes, I'm going to keep it very simple here, with just one on my local machine (keep reading to find out how I installed it)

- Apache Kafka: Kafka is pretty cool, I don't know how I lived without it for so long. If you have or need to have real-time integrations, look no further, start streaming events! In a nutshell, Kafka is just a system that allows you to receive and send messages between many systems. Put it this way, it doesn't sound so special, but the following key capabilities make it extremely useful, and it has more, of course:

- You can have one or many Kafka brokers, i.e. you can have a cluster of Kafka servers in order to handle massive event loads

- You have the ability to create many topics, which are the channels on which to subscribe and publish events

- You can partition the topics, to allow multiple brokers to handle topics in a distributed (and super efficient) way. Zookeeper manages leaders and followers for the partitions and so on

- You can create consumers and producers, subscribing and publishing events to each topic, they can be anywhere, they just need to be able to reach the brokers and the Zookeeper

- It allows you to manage how consumers stream the events, it knows the last one that was received by each consumer, it knows the offsets, it knows when consumers stop streaming and it allows you to resume where it stopped, for each consumer. Of course, there are limits on the amount of time the events live in the stream, but still, this is pretty cool

- As I mentioned above, why build stuff yourself, if you have containers for that? Conveniently, I went to the Docker Hub, searched for Kafka, and I found this image. Easy enough, I followed the instructions and it provides both Zookeeper and Kafka in one go. I followed the single broker instructions for simplification purposes (just clone the repo, replace an IP with localhost in one file and then run one command, that's it). It's like magic!

- MuleSoft: I got a trial account, installed Anypoint Studio, installed the Kafka connector from the exchange and cracked on (it has some pre-requisites, but you get the point, more info here)
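The Kafka bullets above talk about topics, partitions and per-consumer offsets. If that sounds abstract, here's a toy in-memory model of the idea. To be clear, this is not the Kafka client API and not how Kafka is implemented: `ToyTopic`, its methods and the partitioning rule are all made up for illustration.

```python
from collections import defaultdict


class ToyTopic:
    """In-memory stand-in for one topic; NOT the Kafka client API."""

    def __init__(self, partitions: int = 2):
        # Each partition is an append-only list of events.
        self.partitions = [[] for _ in range(partitions)]
        # offsets[consumer][partition] -> next position to read
        self.offsets = defaultdict(lambda: defaultdict(int))

    def produce(self, key: str, value: dict) -> int:
        # Assumed rule: the same key always lands on the same partition,
        # so events for one bridge keep their order.
        partition = sum(key.encode()) % len(self.partitions)
        self.partitions[partition].append(value)
        return partition

    def poll(self, consumer: str) -> list:
        """Return events this consumer hasn't seen yet and advance its offsets."""
        events = []
        for index, log in enumerate(self.partitions):
            start = self.offsets[consumer][index]
            events.extend(log[start:])
            self.offsets[consumer][index] = len(log)
        return events


topic = ToyTopic()
topic.produce("bridge-1", {"weight": 900})
first_batch = topic.poll("mulesoft")    # sees the first event
topic.produce("bridge-1", {"weight": 1200})
second_batch = topic.poll("mulesoft")   # resumes where it stopped
late_joiner = topic.poll("another-system")  # starts from the beginning
```

The point is that the events stay in the log and every consumer keeps its own position, so a new system can subscribe later without the producers knowing or caring.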

Components that live on Salesforce

- Platform events: it's "almost" like having your own Kafka on Salesforce, publish, subscribe, stream. Salesforce handles the bus, it's cool stuff, check it out if you haven't yet

- Assets: just for reference data, they represent the actual bridges in the scenario. We need an external Id called Bridge Id, which we'll use to map platform events to assets

- Salesforce IoT: we need to enable this feature, to gain use of Contexts and Orchestrations

- Context: this is where you set up the events and reference data that orchestrations can access and the keys on how to map them all together

- Orchestration: this is where you configure the states and rules that act on the events and data specified on the Context created before

- We'll also create Cases in certain states and send a type of platform event through a process created with Process Builder

How the solution is implemented

OK, this all sounds pretty cool (in my opinion, of course), but there are a lot of things going on. In this section, I'll show you how I designed the solution so they work together nicely.

I'll show how I configured each item later on, but first I want to run through the processes that exist in the solution. There are four major flows here:

First flow: Bridges producing weight events

- Smart bridges send events via HTTP POST in a specified format

- These are captured in Mulesoft and transformed to the format agreed for Kafka

- Mulesoft produces the Kafka events to the "weight" topic

- Mulesoft consumes the Kafka events and transforms them to the platform event created for this purpose (Bridge_weight__e)

- Mulesoft sends the platform event to Salesforce, inserting a record with the connector, as it would with any other object
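The two transformations in this first flow are configured in Mulesoft, but the mapping itself is simple enough to sketch in a few lines. This is only an illustration of the shape of the data: the `bridgeId`/`weight` payload keys and the `Bridge_Id__c`/`Weight__c` field names are assumptions, only the `Bridge_weight__e` event name and the "weight" topic come from the solution.

```python
import json


def to_kafka_message(http_body: str) -> dict:
    """Map the bridge's HTTP payload to the message for the "weight" topic."""
    data = json.loads(http_body)
    bridge_id = str(data["bridgeId"])
    return {
        "topic": "weight",
        "key": bridge_id,  # keyed by bridge (an assumption)
        "value": {"bridgeId": bridge_id, "weight": float(data["weight"])},
    }


def to_platform_event(message: dict) -> dict:
    """Map a consumed Kafka message to a Bridge_weight__e record."""
    value = message["value"]
    return {
        "sobject": "Bridge_weight__e",
        "fields": {
            "Bridge_Id__c": value["bridgeId"],  # hypothetical field name
            "Weight__c": value["weight"],       # hypothetical field name
        },
    }


message = to_kafka_message('{"bridgeId": 1, "weight": 1200}')
event = to_platform_event(message)
```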

Second flow: Salesforce IoT orchestration flow

- Bridge weight event is received in Salesforce

- There's a rule that checks that the weight is not over the maximum specified in the asset, if it's not, it goes to the "All good" state, as a starting point

- When an event is received that includes a weight that's over the maximum, there is a transition to the "Too much weight" state, and a case is created automatically with the proper information

- The case will then be assigned to an agent (I didn't configure assignment rules for this post, but you know how to do that)

- When the case is being handled and its Status changes to "Working", a process that creates a platform event of the type Fix_event__e is triggered (Kafka also handles this event, details below, on the third flow definition)

- There's a rule waiting on this platform event that has a transition to the "Fixing" state

- Eventually, when the bridge is fixed (or cleared of the excessive weight), an event will come in with the new weight value, which should at this point be under the maximum

- When this happens, a platform event of the type Bridge_is_fixed__e is created (this one is not passed through Kafka in this scenario, but it certainly could, as other systems likely need to subscribe to it. For simplicity, I didn't add that requirement to the current solution). What happens to that event is explained in the fourth flow below
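The orchestration is configured with clicks in Salesforce IoT, but it boils down to a small state machine. Here's a rough sketch of the states and transitions described in this flow, as I read them. The function names and the action labels are made up, and I'm assuming a bridge can go back to "All good" from either "Too much weight" or "Fixing" once the weight drops.

```python
from typing import List, Tuple

ALL_GOOD = "All good"
TOO_MUCH_WEIGHT = "Too much weight"
FIXING = "Fixing"


def on_weight(state: str, weight: float, max_weight: float) -> Tuple[str, List[str]]:
    """Handle a Bridge_weight__e event; return the new state and actions."""
    if weight > max_weight:
        if state == ALL_GOOD:
            # First time over the maximum: open a case.
            return TOO_MUCH_WEIGHT, ["create case"]
        return state, []  # already failing or being fixed
    if state in (TOO_MUCH_WEIGHT, FIXING):
        # Weight is back under the maximum: tell everyone it's fixed.
        return ALL_GOOD, ["publish Bridge_is_fixed__e"]
    return ALL_GOOD, []


def on_fix_event(state: str) -> Tuple[str, List[str]]:
    """Handle a Fix_event__e event (case moved to "Working")."""
    if state == TOO_MUCH_WEIGHT:
        return FIXING, []
    return state, []


# Walk through the scenario for a bridge with a maximum of 1000.
state, actions_1 = on_weight(ALL_GOOD, 1200, 1000)
state, actions_2 = on_fix_event(state)
state, actions_3 = on_weight(state, 800, 1000)
```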

Third flow: Kafka receives the event that a bridge is being fixed

- Mulesoft, using the Salesforce connector, subscribes to the Fix_event__e platform event that was created by the process above

- The payload is transformed to the format agreed for Kafka and an event is produced on the "fixing" topic

- Mulesoft also has a consumer streaming these events from the "fixing" topic

- At this point anything could happen, with other systems and so on, I just have a logger created for now, so be creative!

Fourth flow: Close all cases automatically when bridge is fixed

- Mulesoft, using the Salesforce connector, subscribes to the Bridge_is_fixed__e platform event that was created by the orchestration in the second flow, mentioned above

- Mulesoft queries all open cases for the bridge that triggered the event (this could use some refinement, to avoid closing cases like crazy, but you get the idea)

- Mulesoft transforms the payload, changing the status of the selected cases to "Closed" and updates the records in Salesforce
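The query and transform steps of this fourth flow can be sketched like this. It's just the logic, not the Mulesoft config: the case dictionaries and their `id`, `bridge_id` and `status` keys are hypothetical stand-ins for the records returned by the Salesforce connector.

```python
def close_open_cases(cases: list, bridge_id: str) -> list:
    """Build the updates that close every open case for one bridge."""
    updates = []
    for case in cases:
        if case["bridge_id"] == bridge_id and case["status"] != "Closed":
            updates.append({"id": case["id"], "status": "Closed"})
    return updates


cases = [
    {"id": "c1", "bridge_id": "1", "status": "Working"},
    {"id": "c2", "bridge_id": "1", "status": "Closed"},
    {"id": "c3", "bridge_id": "2", "status": "New"},
]
updates = close_open_cases(cases, "1")
```

As noted above, closing everything that's open for the bridge is quite blunt. Filtering on the case type or origin as well would be a sensible refinement.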

Config details

Below you'll find some screenshots of the config items for the flows above (I don't want to get into a detailed step by step guide here, there are lots of guides and trails for that, brilliantly written):

Common config: Platform events

First Flow

Anypoint Studio config

Mulesoft HTTP config

Mulesoft Kafka connector config

Mulesoft Salesforce connector config

Second flow:

IoT cloud context

IoT cloud Orchestration

Process builder to create Fixing event

Third flow

Anypoint Studio config

Fourth flow

Anypoint Studio config

Note: before you mention it, yes, it could use some error handling, some testing and some prettifying, but I think it gets the points across.

What it looks like

In this section, I'll add some screenshots of the whole solution and how it works.

Simulating the bridges sending events through postman (maximum weight for bridge 1 is 1000)
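If you'd rather script the simulation than click through Postman, something along these lines should do. It only builds the request, so it's safe to run as is. The endpoint URL and the `bridgeId`/`weight` payload keys are assumptions, so replace them with whatever your Mulesoft HTTP listener expects.

```python
import json
import urllib.request

# Hypothetical endpoint: point this at your own Mulesoft HTTP listener.
ENDPOINT = "http://localhost:8081/bridge-weight"


def build_weight_request(bridge_id: str, weight: float) -> urllib.request.Request:
    """Build (but don't send) the HTTP POST a bridge would make."""
    body = json.dumps({"bridgeId": bridge_id, "weight": weight}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Bridge 1 has a maximum of 1000, so this one should trigger a case.
request = build_weight_request("1", 1200)
# To actually send it: urllib.request.urlopen(request)
```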

All our bridges are good!

After simulating with postman sending more weight than the maximum allowed, we see that we have one bridge failing

A case was automatically created and we go and update the status manually

The bridge has transitioned into the "Fixing" state

Log from Mulesoft when consuming from Kafka "fixing" topic

After simulating the weight again to be under the maximum allowed, everything goes back to good!

The case was closed automatically

And that's that!

What it could look like

Thanks for sticking with me all the way to the end, hopefully it didn't get too confusing. There are a few points that could be better and that would need to be implemented to take it to a production-worthy state, a few from the top of my head:

- "Bridge Fixed" platform events should probably go through a Kafka topic as well, but I wanted to simplify a bit the amount of config and screenshots needed

- Depending on the load (and even just for scaling and high availability), Kafka should live in a cluster, with multiple synchronised Zookeepers. This can get hard to manage, so you'll need (at the very least) something like Kubernetes or Mesos (with Marathon and what not) to orchestrate your containers and manage the clusters. I'm really into Kubernetes right now, so a post on how I'm initiating myself on it will likely follow, soon-ish

- Of course, because you probably know me by now, we need to run the implementation of all of this through our DevOps tools and processes, otherwise it doesn't exist! For this scenario we need more than just Salesforce DevOps (you can check my series on Salesforce and DevOps, if you haven't already), we need it to be across the full stack, to cover Kafka, Mulesoft and Kubernetes. This can maybe include some Terraform for creating and managing our infrastructure as code, maybe some Ansible to provision the servers and so on and so forth (looks like another post is needed here, with my personal beginner's view of how all these combine)

- Actually, we don't necessarily need to maintain all of these things ourselves, we can get them "as a service" with Heroku Kafka and the managed cloud version of Mulesoft, for example. There are also more alternatives like AWS IoT or AWS Kinesis + Lambda functions for a serverless approach to syphon the events into IoT cloud and back (thanks to Neil Procter for the comment, I missed this before!). These are awesome solutions if you have the money for it and/or compliance lets you!

- I could've added some Field Service Lightning (FSL), to schedule agents going to find and fix bridges, but that felt like too much for now!

- For Winter '19 (safe harbour and all), there are a few features in preview that could make our solution better/nicer:

- High volume platform events, for getting more and more events into Salesforce without breaking the limits (if it fits the use case, of course)

- Streaming API in a standard Lightning component: if we need to add our events on particular pages, this would come in handy

- IoT insights: would be nice to show this on our bridge assets, for example, to display where they are at any given time

- Other IoT features that are coming, as needed by the use case (of course!). IoT is quite cool, and getting better, in my opinion

Please let me know your thoughts, as usual, and see you in the next one!
